AI, Localization, and the Shifting Meaning of “Good Enough”

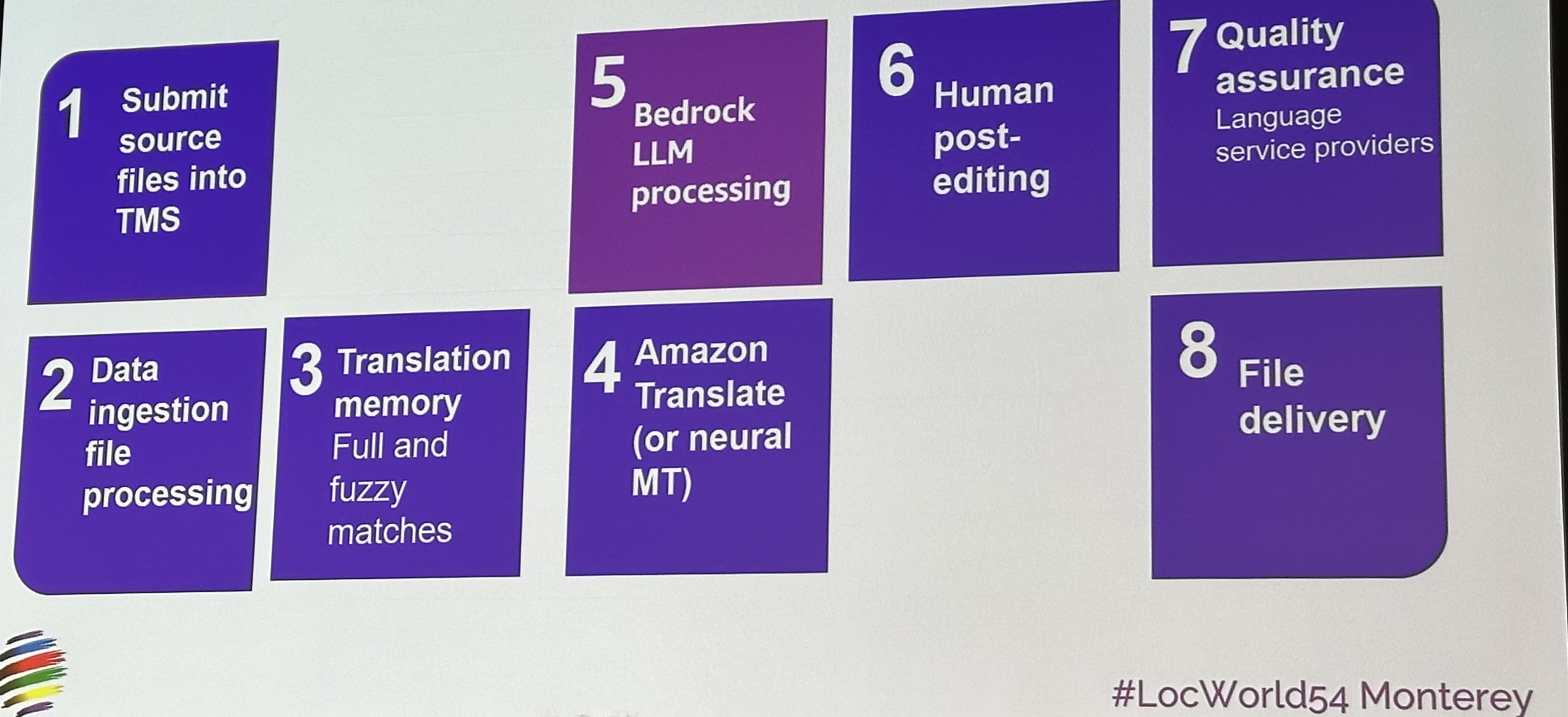

To be honest, I have too many thoughts from LocWorld to fit into one post, so this first entry is more of a Day 1 outline: a snapshot of what stood out to me after a day where about 90% of the discussions revolved around AI. Think of it as a quick update on how my own understanding of AI's role in the localization industry has evolved, both conceptually and technically. Here, I'll focus only on what I found most striking: the shifts in mindset, knowledge structures, and how the industry is actually using AI right now.

Day 1 of LocWorld54 felt like stepping into an AI lab disguised as a localization conference.

Every session, panel, and casual hallway chat eventually circled back to the same topic: generative AI. Not as a buzzword, but as an operational foundation. The consensus was clear: being AI-native is no longer optional. It's the new baseline.

Rethinking the Benchmark

One of the biggest shifts I noticed is how we now measure quality.

Traditional static metrics such as BLEU and MQM are being reimagined as dynamic signals inside model training and supervision loops. A trained model can now monitor and score another model's output, using feedback to continuously improve performance.

The goal has changed. We're no longer solely chasing perfection but aiming for a "good enough for deployment" threshold, one that is explicitly defined by humans.

This new version of "human-in-the-loop" looks different from the old one.

AI brings the translation to 90%; human reviewers determine if that 90% meets business needs. Their decision becomes part of the training data, which makes the system smarter the next time. Quality is no longer a static benchmark; it's a living, adaptive feedback process.
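To make this loop concrete for myself, here's a minimal Python sketch of how a human-defined threshold and reviewer feedback might fit together. Everything in it is an assumption for illustration: the `Segment` and `FeedbackLog` classes, the `triage` function, the 0.9 threshold, and the scores themselves (which in practice would come from a quality-estimation model) are hypothetical, not something any speaker presented.

```python
from dataclasses import dataclass, field


@dataclass
class Segment:
    source: str
    translation: str
    score: float  # hypothetical quality estimate in [0, 1] from a scoring model


@dataclass
class FeedbackLog:
    """Collects reviewer decisions so they can later be used as training data."""
    records: list = field(default_factory=list)

    def record(self, segment: Segment, accepted: bool) -> None:
        self.records.append({
            "source": segment.source,
            "translation": segment.translation,
            "score": segment.score,
            "accepted": accepted,
        })


def triage(segments, threshold):
    """Split segments into 'good enough to deploy' and 'needs human review'."""
    deploy = [s for s in segments if s.score >= threshold]
    review = [s for s in segments if s.score < threshold]
    return deploy, review


# Illustrative data only; the scores are made up.
segments = [
    Segment("Save changes", "Enregistrer les modifications", 0.95),
    Segment("Sign out", "Signer dehors", 0.40),
]

# The threshold is the human-defined "good enough for deployment" bar.
deploy, review = triage(segments, threshold=0.9)

log = FeedbackLog()
for seg in review:
    # In a real workflow a reviewer makes this call; it is hard-coded here.
    log.record(seg, accepted=False)
```

The point of the sketch is the shape of the loop: the threshold is a business decision, and each reviewer verdict ends up in `log.records`, ready to feed the next round of training or threshold tuning.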

Localized Footprints and Data Gravity

Another recurring topic was the localization of AI itself.

Speakers described how “footprints” can be shaped by language and culture—training models on data that reflects local idioms, tone, and regional usage.

This is where large companies hold a clear advantage. They own massive stores of language resources such as translation memories, glossaries, and style guides. These assets enable precise fine-tuning and more domain-specific model performance.

For smaller teams, staying competitive isn't about matching scale.

Because AI evolves so rapidly, agility matters more than size. You're never truly behind—just one iteration away from a better method.

Terminology-Augmented Generation

I also found it fascinating to see the rise of terminology-aware AI.

Instead of relying on post-editing to fix term errors, some organizations now integrate terminology-augmented generation, allowing models to reference the company glossary in real time during decoding.

When combined with translation memory (TM) integration, this approach produces output that's consistent with brand voice and preferred terminology from the start.

It reframes quality assurance from something that happens after translation to something that's built into the generation process.
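Here's a rough Python sketch of the idea as I understood it. A caveat: the sessions talked about glossary access during decoding, while this sketch uses a simpler stand-in, injecting matching glossary entries into the prompt before generation and checking the output afterward. The glossary contents and the `find_terms`, `build_prompt`, and `missing_terms` helpers are all hypothetical, and no real model is called.

```python
import re

# Hypothetical English-to-German company glossary.
GLOSSARY = {
    "dashboard": "Dashboard",
    "workspace": "Arbeitsbereich",
}


def find_terms(source, glossary):
    """Return glossary entries whose source term appears as a whole word."""
    hits = {}
    for term, target in glossary.items():
        if re.search(rf"\b{re.escape(term)}\b", source, flags=re.IGNORECASE):
            hits[term] = target
    return hits


def build_prompt(source, glossary, target_lang="German"):
    """Build a translation prompt that carries only the relevant approved terms."""
    terms = find_terms(source, glossary)
    lines = [f"Translate into {target_lang}."]
    if terms:
        lines.append("Use these approved terms:")
        lines += [f"- {src} -> {tgt}" for src, tgt in terms.items()]
    lines.append(f"Text: {source}")
    return "\n".join(lines)


def missing_terms(translation, terms):
    """List approved target terms that did not make it into the translation."""
    return [tgt for tgt in terms.values() if tgt.lower() not in translation.lower()]


prompt = build_prompt("Open your workspace settings.", GLOSSARY)
print(prompt)
```

The prompt would then go to whatever model the team uses, and `missing_terms` gives a cheap check that the approved terms survived. Even in this simplified form, the term check moves from a post-editing pass into the generation step itself.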

Looking Ahead

Day 1 of LocWorld felt like witnessing the localization industry redesign its own operating system.

The debate is no longer about whether AI belongs in the workflow—it already does. The challenge now is defining how to integrate it responsibly, creatively, and sustainably.

Day 2 will dive deeper into topics like automated LQA, hybrid workflows, and AI-assisted QA. I'll unpack those details in the next post.

As for the future?

Who knows what the next decade will bring.

The kitchen is still there; we just happen to have better cooks and better cookers.